On the frontend you can read file contents with FileReader, but reading a large file (1-3 GB) causes noticeable jank. This post covers how to optimize it.

Cause of jank

Reading the entire file at once loads all of its contents into browser memory in one go, and that is what causes the jank.

Solution

Slice the file and read chunks asynchronously.

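```js
const chunkSize = 1 * 1024 * 1024; // 1 MB

// Read the bytes in [start, start + chunkSize) of a File/Blob as an ArrayBuffer.
function readChunk(file, start, chunkSize) {
  return new Promise((resolve, reject) => {
    const reader = new FileReader();
    reader.onload = (evt) => resolve(evt.target.result); // ArrayBuffer holding this chunk only
    reader.onerror = () => reject(reader.error); // without this, a failed read leaves the Promise pending forever
    // slice() only references the byte range; the bytes are read by readAsArrayBuffer.
    reader.readAsArrayBuffer(file.slice(start, Math.min(start + chunkSize, file.size)));
  });
}
```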
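To read a whole file this way, the chunks can be requested one after another in a loop. The sketch below is a minimal version under a few assumptions: `forEachChunk` and `countNewlines` are hypothetical names, and it calls the standard `Blob.prototype.arrayBuffer()` on each slice in place of FileReader (a File is a Blob, so either works; `readChunk` could be swapped in for the read step). The loop itself only references the current chunk.

```javascript
// Hypothetical helper: visit a File/Blob chunk by chunk instead of loading it all at once.
// Assumption: uses Blob.prototype.arrayBuffer() on each slice rather than FileReader.
async function forEachChunk(blob, chunkSize, onChunk) {
  for (let start = 0; start < blob.size; start += chunkSize) {
    const end = Math.min(start + chunkSize, blob.size);
    // Only the bytes in [start, end) are read here.
    const bytes = new Uint8Array(await blob.slice(start, end).arrayBuffer());
    // Awaiting onChunk lets the callback be async (e.g. hashing or sending the chunk).
    await onChunk(bytes, start);
  }
}

// Hypothetical example use: count newline bytes (0x0A) in a file of any size.
async function countNewlines(blob, chunkSize = 1024 * 1024) {
  let count = 0;
  await forEachChunk(blob, chunkSize, (bytes) => {
    for (const b of bytes) {
      if (b === 0x0a) count++;
    }
  });
  return count;
}
```

In the browser, pass `input.files[0]` from an `<input type="file">`. Because each chunk read is awaited, control returns to the event loop between chunks instead of blocking on one huge read.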

Comparison

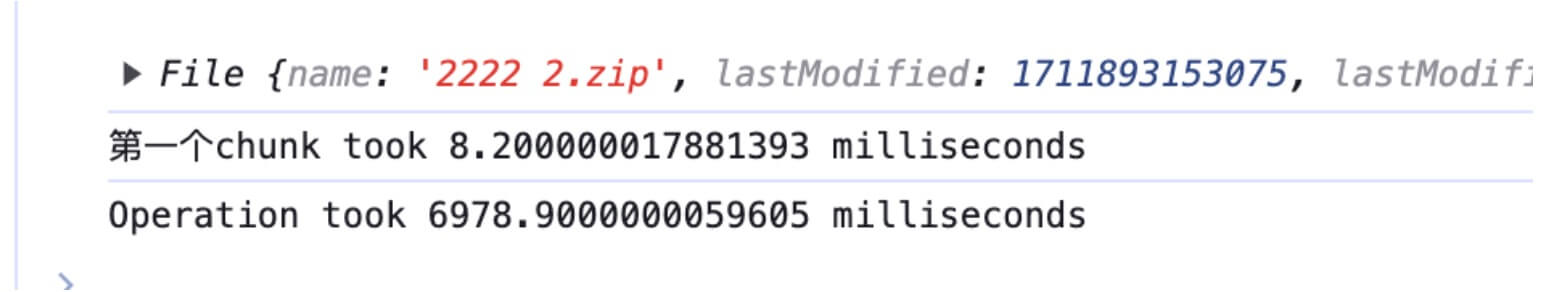

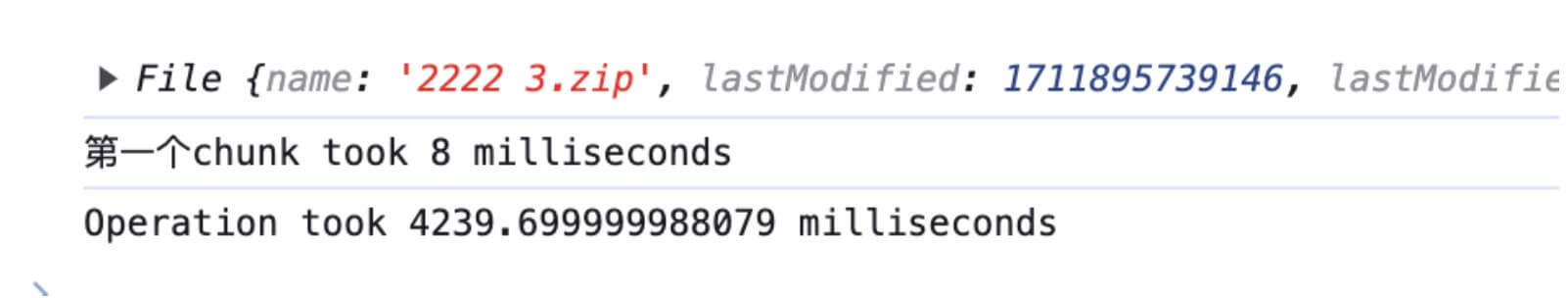

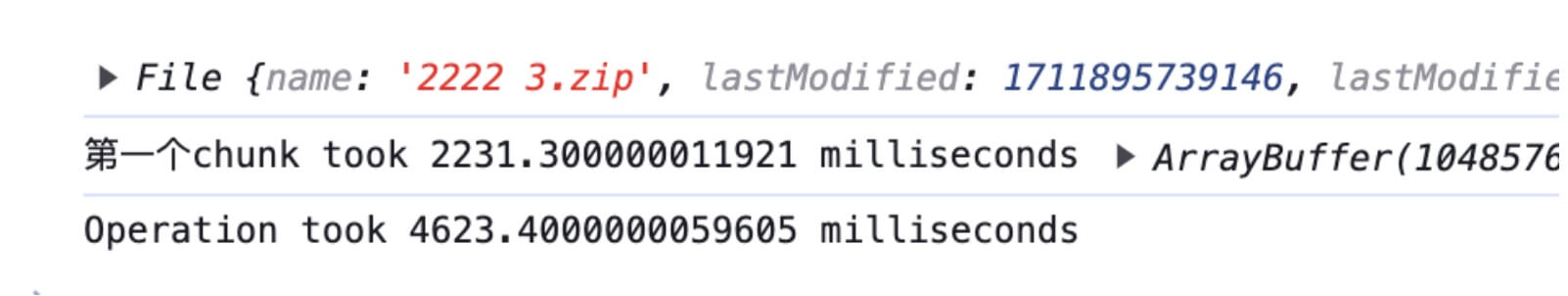

Using 2.3 GB of data as an example:

- Reading with FileReader.readAsArrayBuffer in 1 MB chunks completes; the time cost is shown in the screenshots.

- Reading the full file with FileReader.readAsArrayBuffer never finishes.

Using 1.98 GB of data as an example:

- Chunked reading

- Full read
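To run a similar comparison on your own files, both reads can be timed with `performance.now()`. This is a rough sketch, not the harness behind the measurements in this post: `timeRead`, `readFull`, and `readChunked` are hypothetical helpers, and they use `Blob.prototype.arrayBuffer()` rather than `FileReader.readAsArrayBuffer`, so timings may differ.

```javascript
// Hypothetical helper: time one async read with performance.now(), in milliseconds.
async function timeRead(label, readFn) {
  const t0 = performance.now();
  const result = await readFn();
  const ms = performance.now() - t0;
  console.log(`${label}: ${ms.toFixed(1)} ms`);
  return { result, ms };
}

// Full read: one ArrayBuffer holding the whole file; resolves to its byte length.
function readFull(blob) {
  return blob.arrayBuffer().then((buf) => buf.byteLength);
}

// Chunked read: one slice at a time, keeping only a running byte count.
async function readChunked(blob, chunkSize = 1024 * 1024) {
  let total = 0;
  for (let start = 0; start < blob.size; start += chunkSize) {
    // slice() clamps the end to blob.size, so the last chunk may be shorter.
    const buf = await blob.slice(start, start + chunkSize).arrayBuffer();
    total += buf.byteLength;
  }
  return total;
}
```

Run the two reads one after the other on the same `File` (for example `await timeRead("chunked", () => readChunked(file))`) rather than concurrently, so they do not compete for I/O. As observed above, the full read may never finish for files around 2 GB.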

Conclusion

- Chunked reading keeps the page responsive and can read a specific part of the file quickly, though the total read time is not necessarily shorter. The larger the file, the bigger the gap.

- Reading a file larger than 2 GB in one shot can fail in browsers; the 2.3 GB full read above never finished.
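Because `slice()` takes byte offsets, you can read just the part you need, such as a file header, without reading anything else. A minimal sketch, assuming hypothetical helpers `readRange` and `looksLikePng`; checking the PNG signature is only an illustration of reading a header.

```javascript
// Hypothetical helper: read only bytes [start, end) of a File/Blob.
// Negative offsets count from the end, as Blob.slice() allows.
async function readRange(blob, start, end) {
  return new Uint8Array(await blob.slice(start, end).arrayBuffer());
}

// Illustration: compare the first 8 bytes against the PNG signature
// without touching the rest of the file.
const PNG_SIGNATURE = [0x89, 0x50, 0x4e, 0x47, 0x0d, 0x0a, 0x1a, 0x0a];

async function looksLikePng(blob) {
  const head = await readRange(blob, 0, PNG_SIGNATURE.length);
  return head.length === PNG_SIGNATURE.length && PNG_SIGNATURE.every((b, i) => head[i] === b);
}
```

The cost of `looksLikePng` depends on the 8 bytes it reads, not on the file size, which is why targeted reads stay fast even on multi-gigabyte files.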

Extension

When handling binary files on the frontend, you often deal with Blob and ArrayBuffer. If you only need to slice the data, use Blob: a slice is just a reference to a range of the original data and does not load it into memory, so for uploads you can slice the file and send each chunk directly to the server. If you need to read or modify the file contents on the frontend, use ArrayBuffer.
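The division of labor between the two types can be sketched as follows. `splitIntoChunks`, `uploadChunks`, and `xorBytes` are hypothetical helpers; the upload URL and the `index`/`chunk` form field names are assumptions about a server you would write yourself, not an existing API. Slicing never reads data, while modifying bytes requires loading them into an ArrayBuffer first.

```javascript
// Hypothetical helper: split a File/Blob into Blob chunks for upload.
// No bytes are read here; each chunk only references a byte range.
function splitIntoChunks(blob, chunkSize) {
  const chunks = [];
  for (let start = 0, index = 0; start < blob.size; start += chunkSize, index++) {
    chunks.push({ index, start, blob: blob.slice(start, start + chunkSize) });
  }
  return chunks;
}

// Hypothetical upload loop; uploadUrl and the form field names are assumptions.
async function uploadChunks(file, chunkSize, uploadUrl) {
  for (const { index, blob } of splitIntoChunks(file, chunkSize)) {
    const form = new FormData();
    form.append("index", String(index));
    form.append("chunk", blob);
    const res = await fetch(uploadUrl, { method: "POST", body: form });
    if (!res.ok) throw new Error(`chunk ${index} failed: ${res.status}`);
  }
}

// Modifying contents needs the bytes in memory, so read them into an ArrayBuffer.
// Illustration only: XOR every byte with a key. For a large file, apply this per chunk.
async function xorBytes(blob, key) {
  const bytes = new Uint8Array(await blob.arrayBuffer());
  for (let i = 0; i < bytes.length; i++) bytes[i] ^= key;
  return new Blob([bytes]);
}
```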